Google Unveils SmallThinker: Powerful AI Models for Memory-Constrained Devices

Summary

Google introduces SmallThinker, a family of AI language models optimized for memory-constrained devices. The models match or outperform larger models on benchmarks while running on devices with only 1 to 8 GB of RAM.

Key Points

- Introduces SmallThinker, a family of efficient large language models natively trained for local deployment

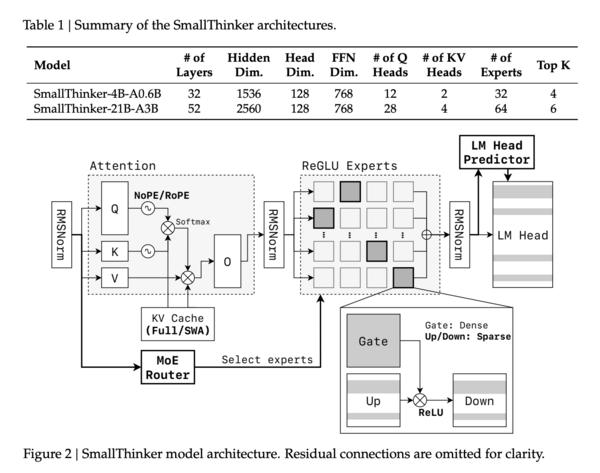

- Employs architectural innovations like fine-grained mixture-of-experts, sparse activations, and hybrid attention for high performance on memory-limited devices

- Outperforms or matches larger models on academic benchmarks while operating smoothly on devices with as little as 1-8GB RAM
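
To make the fine-grained mixture-of-experts idea from the key points more concrete, here is a minimal sketch of top-k expert routing for a single token. It is a generic illustration, not SmallThinker's actual implementation: the function name `moe_forward`, the tensor shapes, the ReLU feed-forward experts, and the default `top_k` are all assumptions chosen for clarity.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


def moe_forward(x, router_w, experts_w1, experts_w2, top_k=2):
    """Route one token through the top_k highest-scoring experts.

    Hypothetical shapes (illustrative only):
      x          : (d_model,)
      router_w   : (d_model, n_experts)
      experts_w1 : (n_experts, d_model, d_ff)
      experts_w2 : (n_experts, d_ff, d_model)
    """
    logits = x @ router_w                  # one score per expert
    top = np.argsort(logits)[-top_k:]      # indices of the top_k experts
    gates = softmax(logits[top])           # renormalize over selected experts
    out = np.zeros_like(x)
    for gate, e in zip(gates, top):
        # ReLU zeroes out negative hidden units, so activations are sparse.
        hidden = np.maximum(x @ experts_w1[e], 0.0)
        out += gate * (hidden @ experts_w2[e])
    return out, top


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d_model, d_ff, n_experts = 8, 16, 32   # many small experts ("fine-grained")
    x = rng.normal(size=d_model)
    router_w = rng.normal(size=(d_model, n_experts))
    w1 = rng.normal(size=(n_experts, d_model, d_ff))
    w2 = rng.normal(size=(n_experts, d_ff, d_model))
    out, chosen = moe_forward(x, router_w, w1, w2, top_k=4)
    print("experts used:", sorted(chosen.tolist()), "of", n_experts)
```

In this sketch only `top_k` of the `n_experts` weight matrices are read for a given token, which is the general reason mixture-of-experts layers are attractive when a device cannot keep every parameter in fast memory.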