Floating-Point Math Quirk and Batch Variability Affect LLM Outputs

Summary

Floating-point arithmetic is non-associative, and the kernels used for large language model (LLM) inference are not batch-invariant. Together, these two properties can make the same prompt produce different outputs from one run to the next. Making the kernels for the key operations (RMSNorm, matrix multiplication, and attention) batch-invariant yields deterministic inference.

Key Points

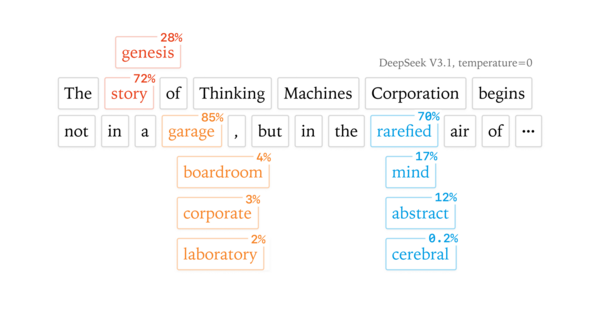

- Floating-point addition is non-associative: (a + b) + c can differ from a + (b + c), so adding the same numbers in a different order can give a different result.

- LLM inference lacks batch invariance: the output for a given request can depend on the batch size, and therefore on which other requests are being served concurrently.

- Making the kernels for RMSNorm, matrix multiplication, and attention batch-invariant enables deterministic LLM inference.
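
The first and last points can be illustrated with a minimal sketch in plain Python. It is not a GPU kernel: `sequential_sum` and `chunked_sum` are hypothetical helpers, and the `chunk` parameter is an assumption standing in for a reduction strategy that a real kernel might pick differently depending on batch size.

```python
# Non-associativity: the same three numbers, grouped two ways.
a, b, c = 0.1, 1e20, -1e20
left = (a + b) + c   # 0.1 is absorbed into 1e20, which then cancels: 0.0
right = a + (b + c)  # 1e20 and -1e20 cancel first, so 0.1 survives: 0.1


def sequential_sum(values):
    """Add values strictly left to right with an explicit loop."""
    total = 0.0
    for v in values:
        total += v
    return total


def chunked_sum(values, chunk):
    """Sum each chunk on its own, then add the partial sums in order.

    Hypothetical stand-in for a reduction whose split size may vary.
    """
    partials = [
        sequential_sum(values[i:i + chunk])
        for i in range(0, len(values), chunk)
    ]
    return sequential_sum(partials)


values = [0.1, 1e20, -1e20, 0.1]

# Changing the split changes the order of additions, and with it the result.
varying = {chunk: chunked_sum(values, chunk) for chunk in (1, 2, 4)}

# Keeping the split fixed gives the same result on every call.
fixed = [chunked_sum(values, 2) for _ in range(3)]

print(left, right)
print(varying)
print(fixed)
```

Under these assumptions, each individual call is perfectly repeatable; results only diverge when the split changes. A batch-invariant kernel corresponds to keeping the reduction order for each request fixed regardless of how many other requests share the batch.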