Breakthrough Technique Unlocks Knowledge from Large Language Models

Summary

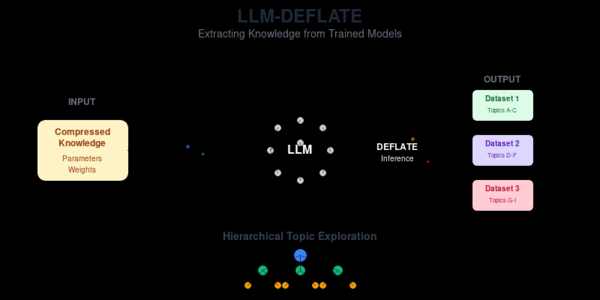

Researchers have developed a groundbreaking technique that systematically extracts factual knowledge and reasoning patterns from large language models such as GPT-OSS, Llama 3, and Qwen3-Coder, enabling model analysis, knowledge transfer, and data augmentation.

Key Points

- Large language models compress massive amounts of training data into their parameters, so that knowledge can be extracted again through systematic decompression.

- The decompression technique uses hierarchical topic exploration to traverse a model's knowledge space, extracting factual knowledge and reasoning patterns along the way.

- The approach generates structured datasets from open-source models such as GPT-OSS, Llama 3, and Qwen3-Coder, with practical applications in model analysis, knowledge transfer, and data augmentation.
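The hierarchical exploration described in the key points can be illustrated with a minimal sketch. The summary does not give the actual prompts, branching rules, or output schema, so everything here is an assumption. The names `decompress`, `fake_subtopics`, and `fake_facts` and the `topic_path`/`text` record fields are hypothetical, and a small hard-coded dictionary stands in for the language model. The sketch walks a topic tree breadth-first up to a depth limit and emits each extracted item as a JSON Lines record.

```python
import json
from collections import deque
from typing import Callable, Dict, List

# Hypothetical stand-in for a language model: maps a topic to its subtopics.
# A real pipeline would instead prompt a model (e.g. Llama 3) for this list.
FAKE_KNOWLEDGE: Dict[str, List[str]] = {
    "Science": ["Physics", "Biology"],
    "Physics": ["Mechanics", "Optics"],
    "Biology": ["Genetics"],
}


def fake_subtopics(path: List[str]) -> List[str]:
    """Hypothetical: return subtopics of the last topic on the path."""
    return FAKE_KNOWLEDGE.get(path[-1], [])


def fake_facts(path: List[str]) -> List[str]:
    """Hypothetical: return extracted statements for the given topic path."""
    return [f"A fact about {' > '.join(path)}"]


def decompress(root: str,
               get_subtopics: Callable[[List[str]], List[str]],
               get_facts: Callable[[List[str]], List[str]],
               max_depth: int = 3) -> List[dict]:
    """Breadth-first traversal of a topic hierarchy, one record per extracted fact."""
    records: List[dict] = []
    seen = {root}                 # topics already queued, to avoid revisiting
    queue = deque([[root]])       # each entry is a path from the root topic
    while queue:
        path = queue.popleft()
        for fact in get_facts(path):
            records.append({"topic_path": path, "text": fact})
        if len(path) >= max_depth:
            continue              # depth limit reached: do not expand further
        for sub in get_subtopics(path):
            if sub not in seen:
                seen.add(sub)
                queue.append(path + [sub])  # new list, so stored paths stay intact
    return records


if __name__ == "__main__":
    # Emit the structured dataset as JSON Lines on stdout.
    for record in decompress("Science", fake_subtopics, fake_facts):
        print(json.dumps(record))
```

In a real system the two callbacks would presumably wrap prompts to one of the models named above. Breadth-first order keeps broad topics ahead of narrow ones, while the `seen` set and the depth limit keep the traversal from looping or growing without bound.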