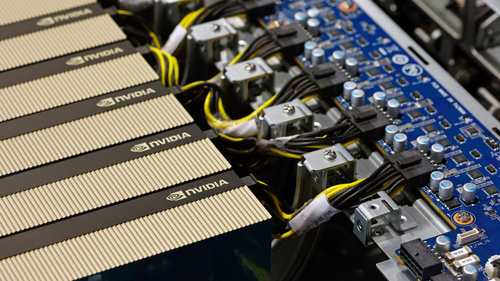

Nvidia Achieves Breakthrough with 4-Bit AI Training, Delivering 6X Speed Boost While Matching Full Precision Results

Summary

Nvidia has trained a 12-billion-parameter language model using its new 4-bit NVFP4 format, reporting training speeds up to 6X faster than BF16 and half the memory consumption of FP8. Validation loss came in only 1% higher than the FP8 baseline, and NVFP4 required 36% less training data than the competing MXFP4 format.

Key Points

- Nvidia trained a 12-billion-parameter language model in its new NVFP4 4-bit floating-point format, nearly matching the FP8 baseline with only 1% higher validation loss

- NVFP4 delivers 4-6X speed improvements over BF16 and reduces memory consumption by half compared to FP8, while outperforming the competing MXFP4 format by requiring 36% less training data

- The approach keeps 15% of model layers in BF16 precision and relies on techniques such as stochastic rounding and block scaling to keep 4-bit training stable
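
To make the last point more concrete, here is a minimal sketch of how block scaling and stochastic rounding can fit together. It is an illustration only, not Nvidia's implementation: the block length of 16, the plain floating-point scale per block, and all function names are assumptions made for this example. The grid lists the magnitudes a 4-bit E2M1 float can represent.

```python
import random

# Non-negative magnitudes representable by a 4-bit E2M1 float (sign handled separately).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # assumed block length for this sketch


def stochastic_round(x, rng):
    """Round x to one of its two neighbouring grid values.

    The upper neighbour is picked with probability proportional to how close
    x is to it, so the result equals x on average (no systematic bias).
    """
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), FP4_GRID[-1])  # clamp to the largest representable value
    for lo, hi in zip(FP4_GRID, FP4_GRID[1:]):
        if lo <= mag <= hi:
            p_up = (mag - lo) / (hi - lo)
            return sign * (hi if rng.random() < p_up else lo)
    return sign * FP4_GRID[-1]


def quantize_blocks(values, rng):
    """Return (scales, codes): one scale per block plus grid values per element."""
    scales, codes = [], []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        amax = max(abs(v) for v in block)
        # Map the block's largest magnitude onto the largest grid value.
        scale = amax / FP4_GRID[-1] if amax > 0 else 1.0
        scales.append(scale)
        codes.append([stochastic_round(v / scale, rng) for v in block])
    return scales, codes


def dequantize_blocks(scales, codes):
    """Multiply each grid value by its block's scale to recover approximate values."""
    return [s * c for s, block in zip(scales, codes) for c in block]


rng = random.Random(0)
values = [0.01 * i for i in range(32)]
scales, codes = quantize_blocks(values, rng)
restored = dequantize_blocks(scales, codes)
```

Because each block gets its own scale, a block of small values is not forced onto a grid sized for the largest value in the whole tensor. Stochastic rounding then keeps the rounding error unbiased on average, which is the usual motivation for using it when precision is this low.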