Scientists Create Advanced AI System That Reads Text, Images, and Tables Together, Outperforming Traditional Search Methods

Summary

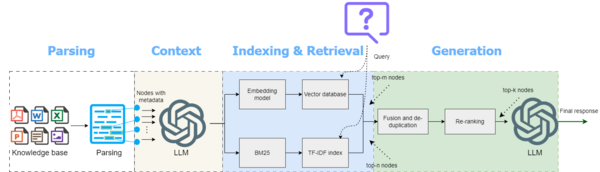

Researchers have built a multimodal retrieval-augmented generation (RAG) system that processes text, images, and tables from documents together. It combines advanced document parsing with hybrid search and re-ranking, and in the reported tests it answers questions more accurately than a basic RAG pipeline while costing less to run.

Key Points

- Researchers develop a multimodal RAG system that integrates text, images, and tables from documents using LlamaParse premium mode for advanced parsing and OpenAI's cost-effective gpt-4o-mini model

- The system addresses traditional RAG limitations by adding contextual information to document chunks, combining semantic search with BM25 keyword search, and implementing re-ranking to improve retrieval accuracy

- Performance testing shows the enhanced multimodal system significantly outperforms basic RAG implementations, correctly answering image-based questions while providing a more cost-effective solution than Claude-based alternatives
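
To make the hybrid search idea in the second key point more concrete, here is a minimal sketch of how a BM25 keyword ranking and a semantic ranking could be merged. It is an illustration under stated assumptions, not the researchers' implementation: the fusion method (reciprocal rank fusion), the function names, the sample documents, and the hard-coded semantic scores are all hypothetical, and a real system would get the semantic scores from an embedding model and then pass the fused candidates to a re-ranker.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer (a deliberately simple assumption)."""
    return text.lower().split()


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with Okapi BM25."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def ranking(scores):
    """Return document indices sorted from best to worst score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse rankings: each document earns 1 / (k + rank) per ranking."""
    fused = Counter()
    for order in rankings:
        for rank, doc_id in enumerate(order, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return sorted(fused, key=lambda d: fused[d], reverse=True)


# Hypothetical document chunks, as a parser might produce them.
docs = [
    "quarterly revenue table by region",
    "chart showing revenue growth over time",
    "team photo from the offsite",
]
query = "revenue chart"

keyword_scores = bm25_scores(query, docs)
# Placeholder similarity scores standing in for an embedding model's output.
semantic_scores = [0.40, 0.90, 0.05]

fused = reciprocal_rank_fusion(
    [ranking(keyword_scores), ranking(semantic_scores)]
)
print(fused)
```

Fusing on ranks rather than raw scores sidesteps the fact that BM25 scores and embedding similarities live on different scales, which is one common reason to prefer this approach when combining keyword and semantic retrieval.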