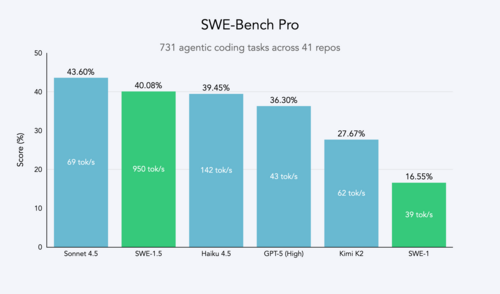

Cognition Launches SWE-1.5 AI Coding Model Running at Up to 950 Tokens Per Second, 13x Faster Than Sonnet 4.5

Summary

Cognition has released SWE-1.5, an AI coding model with hundreds of billions of parameters. It runs at up to 950 tokens per second, which Cognition says is 13x faster than Sonnet 4.5, and it delivers near state-of-the-art coding performance. The quality comes from reinforcement learning on custom coding environments, and the speed comes from a partnership with Cerebras for inference infrastructure.

Key Points

- Cognition releases SWE-1.5, a frontier-size coding model with hundreds of billions of parameters that achieves near state-of-the-art (SOTA) performance while running at up to 950 tokens per second through a partnership with Cerebras

- The model is trained with end-to-end reinforcement learning on custom coding environments that use three grading mechanisms: classical tests, code-quality rubrics, and agentic browser-based testing. Together these are meant to steer the model away from common problems in AI-generated code

- SWE-1.5 was trained on NVIDIA GB200 NVL72 rack-scale systems and is integrated with the Cascade agent harness. It runs 6x faster than Haiku 4.5 and 13x faster than Sonnet 4.5 while maintaining frontier-level coding capabilities
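To put the speed claims in concrete terms, the sketch below back-calculates the baseline throughputs implied by the announced figures and estimates how long each model would take to produce a response of a given length. It assumes that "Nx faster" means a straight ratio of output tokens per second measured against SWE-1.5's peak of 950 tokens per second. The announcement does not spell out its measurement method, so treat the results as rough illustrations. The function names and the 2,000-token response length are hypothetical choices made for this example.

```python
# Figures as stated in the announcement (vendor-reported, "up to" peak speed).
SWE_1_5_TOKENS_PER_SEC = 950.0
CLAIMED_SPEEDUPS = {"Haiku 4.5": 6.0, "Sonnet 4.5": 13.0}


def implied_throughput(model_tps: float, speedup: float) -> float:
    """Baseline tokens/sec implied if model_tps is `speedup` times faster.

    Assumption: the speedup is a simple ratio of output tokens per second.
    """
    return model_tps / speedup


def seconds_to_generate(num_tokens: int, tokens_per_sec: float) -> float:
    """Time to stream num_tokens at a constant rate (ignores latency to first token)."""
    return num_tokens / tokens_per_sec


if __name__ == "__main__":
    n = 2000  # hypothetical response length in tokens
    print(f"SWE-1.5: {SWE_1_5_TOKENS_PER_SEC:.0f} tok/s, "
          f"{seconds_to_generate(n, SWE_1_5_TOKENS_PER_SEC):.1f} s")
    for name, speedup in CLAIMED_SPEEDUPS.items():
        tps = implied_throughput(SWE_1_5_TOKENS_PER_SEC, speedup)
        print(f"{name}: ~{tps:.0f} tok/s, {seconds_to_generate(n, tps):.1f} s")
```

Under these assumptions, the claims imply roughly 158 tokens per second for Haiku 4.5 and roughly 73 tokens per second for Sonnet 4.5. The comparison ignores time to first token and tool-call round trips, both of which also affect how fast an agent feels in practice.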