NVIDIA Launches Open-Source NeMo Automodel Library Achieving Up to 280 TFLOPs/sec per GPU for AI Training

Summary

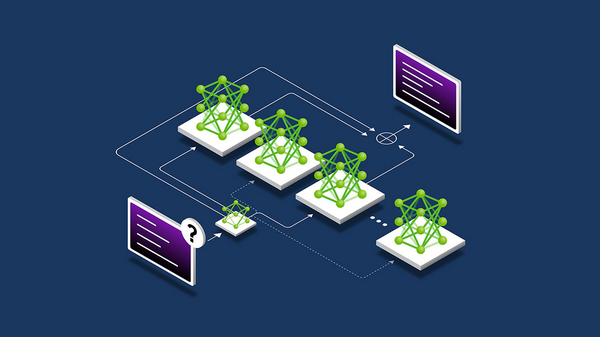

NVIDIA has released NeMo Automodel, an open-source library that lets developers train massive mixture-of-experts (MoE) models directly in PyTorch with simplified infrastructure. The library delivers up to 280 TFLOPs/sec per GPU and scales near-linearly from 8 to more than 1,000 GPUs.

Key Points

- NVIDIA releases NeMo Automodel, an open-source library that enables developers to train large-scale mixture-of-experts models directly in PyTorch with simplified infrastructure requirements

- The library sustains 190 to 280 TFLOPs/sec per GPU and processes up to 13,000 tokens/sec by using NVIDIA Transformer Engine kernels and additional optimization techniques

- NeMo Automodel demonstrates near-linear scaling from 8 to over 1,000 GPUs; the DeepSeek V3 671B model reaches 250 TFLOPs/sec per GPU on 256 GPUs while keeping training cost-effective
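
To put the per-GPU figures in context, the short Python sketch below converts a per-GPU rate into aggregate cluster throughput and shows one common way to quantify "near-linear" scaling. The function names are illustrative and are not part of NeMo Automodel. The 250 TFLOPs/sec and 256 GPU values come from the DeepSeek V3 figure above; the baseline passed to `scaling_efficiency` is a hypothetical placeholder, since the article does not report a small-cluster rate for the same model.

```python
def aggregate_pflops(per_gpu_tflops: float, num_gpus: int) -> float:
    """Total cluster throughput in PFLOPs/sec from a per-GPU rate in TFLOPs/sec."""
    return per_gpu_tflops * num_gpus / 1000.0


def scaling_efficiency(base_per_gpu_tflops: float, scaled_per_gpu_tflops: float) -> float:
    """Fraction of per-GPU throughput retained after scaling out.

    A value of 1.0 means perfectly linear scaling; "near-linear" means
    the ratio stays close to 1.0 as GPUs are added.
    """
    return scaled_per_gpu_tflops / base_per_gpu_tflops


# Figures reported above: DeepSeek V3 671B at 250 TFLOPs/sec per GPU on 256 GPUs.
total = aggregate_pflops(250.0, 256)
print(f"Aggregate throughput: {total:.1f} PFLOPs/sec")

# Hypothetical baseline (NOT from the article), used only to show the calculation.
hypothetical_base = 260.0
print(f"Scaling efficiency: {scaling_efficiency(hypothetical_base, 250.0):.1%}")
```

Under these assumptions, 256 GPUs at 250 TFLOPs/sec each amount to 64 PFLOPs/sec of aggregate training throughput.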