DeepSeek's New AI Models Outperform GPT-5 at 70% Lower Cost as US Data Centers Face Power Crisis

Summary

DeepSeek's new AI models outperform GPT-5 on benchmarks while cutting inference costs by 70% through sparse attention. Meanwhile, OpenAI co-founder Ilya Sutskever argues that the era of simply building bigger AI models is over, and US power grids are straining under data center demand projected to more than double by 2035.

Key Points

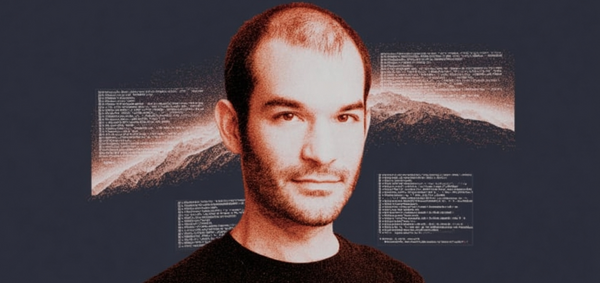

- Ilya Sutskever questions whether scaling laws alone can deliver AGI, arguing that making LLMs larger does not inherently make them better and calling for a return to research-focused approaches rather than pure scaling

- Chinese AI company DeepSeek releases two new models that outperform OpenAI's GPT-5 and rival Google's Gemini 3.0 on benchmarks while running at 70% lower inference cost, thanks to a more efficient sparse attention mechanism

- US data center power demand is projected to more than double to 106 gigawatts by 2035 driven by AI growth, creating potential grid capacity challenges and shifting data center geography away from saturated regions
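The summary credits DeepSeek's cost savings to sparse attention but does not describe how the mechanism works. As a generic illustration only, and not DeepSeek's actual design, the sketch below compares dense causal attention with a sliding-window pattern. This is one common form of sparse attention, in which each token scores only its most recent `window` tokens instead of every earlier token. The function name `attention`, the window size of 64, and the random inputs are illustrative assumptions. The count of query-key scores is a rough proxy for compute, not a measurement of real inference cost.

```python
import math
import random
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix,
              window: Optional[int] = None) -> Tuple[Matrix, int]:
    """Causal scaled dot-product attention (illustrative sketch).

    window=None: query i scores every key 0..i (dense).
    window=w:    query i scores only keys max(0, i-w+1)..i (sliding-window sparse).
    Returns the attention outputs and how many query-key scores were computed.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    outputs: Matrix = []
    num_scores = 0
    for i, qi in enumerate(q):
        start = 0 if window is None else max(0, i - window + 1)
        idx = range(start, i + 1)
        scores = [scale * sum(a * b for a, b in zip(qi, k[j])) for j in idx]
        num_scores += len(scores)
        weights = softmax(scores)
        # Weighted sum over only the value rows that were attended to.
        out = [0.0] * len(v[0])
        for w, j in zip(weights, idx):
            for c, val in enumerate(v[j]):
                out[c] += w * val
        outputs.append(out)
    return outputs, num_scores


# Demo on random vectors (self-attention, so q = k = v = x).
rng = random.Random(0)
n, d = 512, 8
x = [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(n)]
dense_out, dense_cost = attention(x, x, x)
sparse_out, sparse_cost = attention(x, x, x, window=64)
print(dense_cost, sparse_cost)  # 131328 30752
```

With n = 512 tokens, the dense version computes n(n+1)/2 scores, while the windowed version grows roughly as n × window, so the gap widens as context length increases. Whether this translates into a given end-to-end saving, such as the 70% figure reported for DeepSeek, depends on the full model architecture and serving stack.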