DeepSeek Unveils Revolutionary AI Architecture That Boosts Language Model Performance with Minimal Hardware Cost

Summary

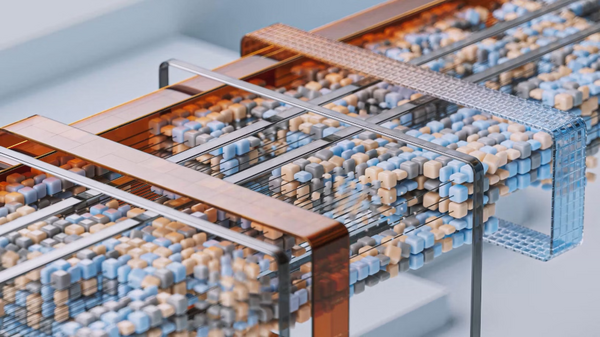

DeepSeek researchers unveil Manifold-Constrained Hyper-Connections (mHC), an AI architecture that uses mathematical manifolds to constrain the connections between model layers. In the researchers' tests, mHC improves language model performance at every model size evaluated while adding only 6.27% hardware overhead.
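
The summary describes mHC as constraining layer connections with a manifold. As far as we understand the paper, that manifold is the set of doubly stochastic matrices (non-negative, with every row and column summing to 1), and the projection onto it uses Sinkhorn-Knopp normalization; treat both as assumptions here. The sketch below shows that projection in plain Python. The function name `sinkhorn_knopp`, the score values and the iteration count are illustrative choices, not DeepSeek's code.

```python
import math
from typing import List

Matrix = List[List[float]]


def sinkhorn_knopp(scores: Matrix, n_iters: int = 100) -> Matrix:
    """Map a square matrix of unconstrained scores to an approximately doubly
    stochastic matrix: non-negative, with every row and column summing to 1."""
    n = len(scores)
    # Exponentiate so that every entry is strictly positive
    m = [[math.exp(x) for x in row] for row in scores]
    for _ in range(n_iters):
        # Row step: rescale each row to sum to 1
        row_sums = [sum(row) for row in m]
        m = [[x / s for x in row] for row, s in zip(m, row_sums)]
        # Column step: rescale each column to sum to 1
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return m


# Hypothetical 4 x 4 scores, e.g. produced by a learned projection
scores = [[0.5, -1.0, 0.2, 0.0],
          [1.0, 0.3, -0.5, 0.1],
          [-0.2, 0.8, 0.0, -1.0],
          [0.0, 0.0, 0.9, 0.4]]
h_res = sinkhorn_knopp(scores)
print([round(sum(row), 6) for row in h_res])        # row sums, each 1.0
print([round(sum(col), 6) for col in zip(*h_res)])  # column sums, each 1.0
```

A matrix with this property only takes weighted averages of the quantities it mixes, which is why it is a natural candidate for combining residual streams without amplifying them.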

Key Points

- DeepSeek researchers develop Manifold-Constrained Hyper-Connections (mHC), a new AI architecture that enhances the residual connections large language models use to carry information from one layer to the next

- mHC uses mathematical objects called manifolds to keep gradients stable as signals pass between model layers, improving on the earlier Hyper-Connections (HC) design

- Testing shows mHC-powered language models with 3, 9, and 27 billion parameters outperform standard models across eight benchmarks while requiring only 6.27% hardware overhead
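
The key points say mHC keeps signals stable as they pass through the connections between layers. The sketch below is a simplified, hypothetical hyper-connection update: the hidden state is kept as n parallel streams, a mixing matrix `h_res` blends them at every layer, `h_pre` collapses them into the layer's input, and `h_post` writes the layer's output back. The names, the matrix values and this exact update rule are our own illustration, not DeepSeek's code. To isolate the residual path, the layer's own output is set to zero; a real layer would of course contribute a non-zero term.

```python
from typing import Callable, List

Matrix = List[List[float]]
Vector = List[float]


def hc_layer(streams: Matrix, h_res: Matrix, h_pre: Vector, h_post: Vector,
             f: Callable[[Vector], Vector]) -> Matrix:
    """One simplified hyper-connection update over n residual streams.

    streams[i] is the i-th copy of a width-d hidden state (hypothetical layout).
    """
    n, d = len(streams), len(streams[0])
    # Read: collapse the n streams into one layer input, weighted by h_pre
    layer_in = [sum(h_pre[i] * streams[i][k] for i in range(n)) for k in range(d)]
    layer_out = f(layer_in)
    # Mix the streams with h_res, then add the layer output weighted by h_post
    return [[sum(h_res[i][j] * streams[j][k] for j in range(n)) + h_post[i] * layer_out[k]
             for k in range(d)]
            for i in range(n)]


def residual_gain(h_res: Matrix, depth: int = 64, d: int = 3) -> float:
    """Largest stream magnitude after `depth` layers whose own output is zero,
    so only the residual mixing path acts on an all-ones input."""
    n = len(h_res)

    def zero_layer(v: Vector) -> Vector:
        return [0.0] * len(v)

    streams = [[1.0] * d for _ in range(n)]
    for _ in range(depth):
        streams = hc_layer(streams, h_res, [1.0 / n] * n, [1.0] * n, zero_layer)
    return max(abs(x) for row in streams for x in row)


# Hypothetical mixing matrices for n = 4 streams
unconstrained = [[1.0, 0.2, 0.0, 0.0],
                 [0.0, 1.0, 0.2, 0.0],
                 [0.0, 0.0, 1.0, 0.2],
                 [0.2, 0.0, 0.0, 1.0]]      # every row sums to 1.2
doubly_stochastic = [[0.7, 0.1, 0.1, 0.1],
                     [0.1, 0.7, 0.1, 0.1],
                     [0.1, 0.1, 0.7, 0.1],
                     [0.1, 0.1, 0.1, 0.7]]  # every row and column sums to 1

print(residual_gain(unconstrained))
print(residual_gain(doubly_stochastic))
```

The unconstrained run ends near 1.2**64, roughly 1.2e5, while the doubly stochastic run stays at 1.0. Because each row of a non-negative doubly stochastic matrix is a weighted average, stacking such layers cannot inflate the streams, which is the intuition behind constraining the mixing matrix rather than leaving it free.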