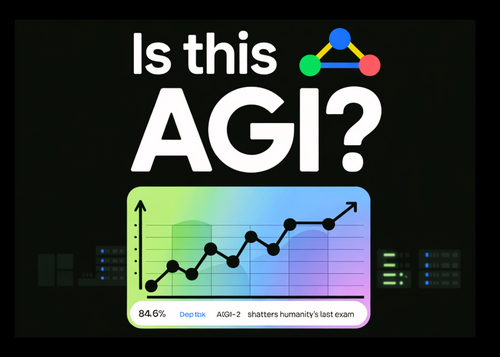

Google's Gemini 3 Deep Think Shatters AI Benchmarks, Scoring 84.6% on the ARC-AGI-2 Reasoning Test and Reaching Grandmaster-Level Programming

Summary

Google's Gemini 3 Deep Think AI model achieves an unprecedented 84.6% on the ARC-AGI-2 reasoning benchmark, 24.6 percentage points above the 60% human average. It also reaches Legendary Grandmaster status in competitive programming and earns gold medal-level results in international science and math competitions.

Key Points

- Google's Gemini 3 Deep Think scores 84.6% on the ARC-AGI-2 benchmark, exceeding the 60% human average and marking a large jump over previous AI models, which struggled to break 20%

- The model reaches Legendary Grandmaster level in competitive programming with a Codeforces Elo rating of 3455 and scores 48.4% on Humanity's Last Exam without external tools

- Gemini 3 Deep Think earns gold medal-level performance on the 2025 International Physics, Chemistry, and Mathematical Olympiads, and demonstrates practical engineering capabilities such as converting sketches into 3D-printable objects