Netflix Develops Internal Framework That Boosts AI Model Training Throughput by Up to 4.7x

Summary

Netflix has built an internal Post-Training Framework that raises AI model training throughput by up to 4.7x through optimizations such as on-the-fly sequence packing. The framework lets the company move large language model fine-tuning from experimentation to production deployment across distributed GPU clusters.

Key Points

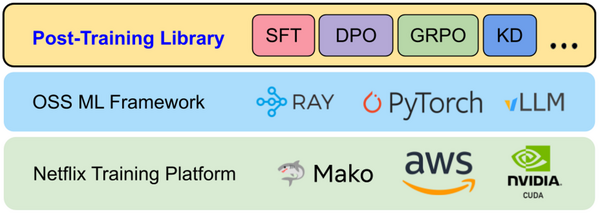

- Netflix built an internal Post-Training Framework to scale LLM fine-tuning from experimentation to production. It addresses complex data pipelines, distributed GPU clusters, and multi-stage workflows that standard tools cannot handle.

- The framework supports Supervised Fine-Tuning (SFT), Direct Preference Optimization (DPO), and Reinforcement Learning (RL) through a hybrid architecture that evolved from simple SPMD (single program, multiple data) execution to active controller orchestration.

- Netflix reports throughput improvements of up to 4.7x from optimizations such as on-the-fly sequence packing and automatic vocabulary padding, while maintaining compatibility with Hugging Face ecosystem standards.
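
The two optimizations named in the last point can be illustrated with a minimal sketch. This is not Netflix's implementation, which is not described here. The function names `pack_sequences` and `pad_vocab_size`, the greedy packing strategy, and the default multiple of 64 are all assumptions chosen for illustration.

```python
from typing import Iterable, Iterator, List


def pad_vocab_size(vocab_size: int, multiple: int = 64) -> int:
    """Round vocab_size up to the next multiple (hypothetical helper).

    The multiple of 64 is an assumed default; padding the vocabulary
    dimension to a hardware-friendly size is a common GPU optimization.
    """
    return ((vocab_size + multiple - 1) // multiple) * multiple


def pack_sequences(
    sequences: Iterable[List[int]], max_len: int
) -> Iterator[List[List[int]]]:
    """Greedily group consecutive token sequences into packs.

    Each yielded pack holds at most max_len tokens in total. Sequences
    are consumed lazily, so packing happens on the fly while streaming.
    """
    pack: List[List[int]] = []
    used = 0
    for seq in sequences:
        seq = seq[:max_len]  # truncate sequences longer than one pack
        if pack and used + len(seq) > max_len:
            yield pack  # current pack is full; start a new one
            pack, used = [], 0
        pack.append(seq)
        used += len(seq)
    if pack:
        yield pack  # emit the final, possibly partial, pack


if __name__ == "__main__":
    docs = [[1, 2, 3], [4, 5], [6, 7, 8, 9], [10]]
    for p in pack_sequences(docs, max_len=6):
        print(p)
    print(pad_vocab_size(50257))
```

In this toy input, padding each of the four sequences to length 6 would occupy 24 token slots for 10 real tokens, while the two packs occupy 12. A production version would also need attention masks or position IDs that keep packed sequences from attending to one another.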