AI Security Breakthrough: New System Detects Malicious Prompts by Reading AI's Internal Thoughts, Outperforms Existing Guards

Summary

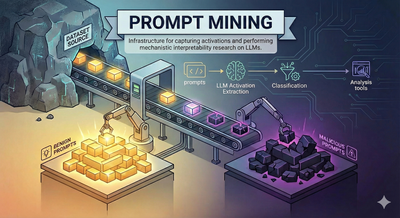

Zenity Labs has developed an AI security system that inspects a language model's internal activations to detect malicious prompts. It outperforms existing guards such as Prompt-Guard-2 at stopping jailbreaks and prompt injections, though with higher false positive rates.

Key Points

- Zenity Labs has developed a maliciousness classifier that analyzes an LLM's internal activations rather than only its input and output. It pairs Llama-3.1-8B-Instruct with logistic regression to detect malicious prompts and give interpretable explanations

- The system is tested out of distribution by holding entire datasets out of training. This reveals significantly lower performance than traditional train-test splits suggest, showing how such splits can mislead security evaluations

- Compared with existing solutions such as Prompt-Guard-2 and Llama-Guard-3-8B, the activation-based approach is better at detecting jailbreaks and indirect prompt injections, though false positive rates on benign prompts remain high at 3.7-6.8%
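To make the evaluation idea in the key points concrete, here is a minimal sketch of a logistic regression probe scored by holding out one whole dataset at a time. It is not Zenity Labs' implementation. The feature vectors are synthetic stand-ins for activations (no model is loaded), and every name here (`make_dataset`, `train_logreg`, `leave_one_dataset_out`, the `shift` parameter) is a hypothetical chosen for illustration.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X, y, lr=0.1, epochs=200):
    """Fit logistic regression with plain batch gradient descent."""
    dim, n = len(X[0]), len(X)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(dim):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(model, x):
    """Return the predicted probability that x is malicious (label 1)."""
    w, b = model
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def make_dataset(seed, shift, n=40, dim=8):
    """ASSUMPTION: synthetic vectors standing in for LLM activations.

    `shift` moves the whole dataset to mimic a distribution change
    between prompt sources; label 1 means malicious, 0 means benign.
    """
    rng = random.Random(seed)
    X, y = [], []
    for i in range(n):
        label = i % 2
        X.append([rng.gauss(label * 1.5 + shift, 1.0) for _ in range(dim)])
        y.append(label)
    return X, y


def leave_one_dataset_out(datasets):
    """Train on all datasets but one, then score on the held-out one."""
    scores = {}
    for held_out in datasets:
        X_train, y_train = [], []
        for name, (X, y) in datasets.items():
            if name != held_out:
                X_train.extend(X)
                y_train.extend(y)
        model = train_logreg(X_train, y_train)
        X_test, y_test = datasets[held_out]
        correct = sum(
            (predict_proba(model, x) >= 0.5) == bool(t)
            for x, t in zip(X_test, y_test)
        )
        scores[held_out] = correct / len(X_test)
    return scores


if __name__ == "__main__":
    datasets = {
        "set_a": make_dataset(0, 0.0),
        "set_b": make_dataset(1, 0.3),
        "set_c": make_dataset(2, -0.3),
    }
    for name, acc in leave_one_dataset_out(datasets).items():
        print(f"held out {name}: accuracy {acc:.2f}")
```

The held-out dataset never contributes to training, so each score reflects prompts from a source the probe has not seen. A random train-test split over the pooled data would mix every source into both halves and tends to look more optimistic.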