Exponential Error Rates and Quadratic Costs Undermine AI Agents at Scale

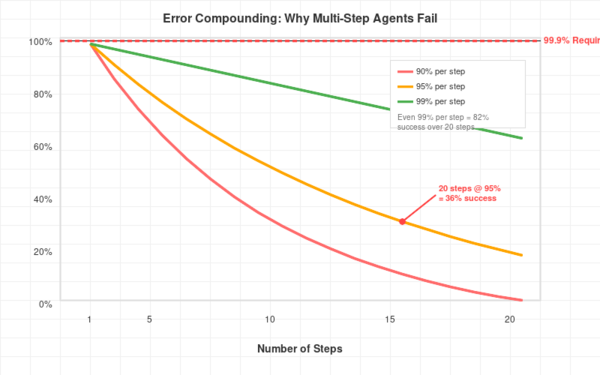

Exponentially compounding error rates and quadratically scaling costs render multi-step autonomous AI workflows mathematically and economically infeasible at scale, underscoring the pressing need to develop effective human-AI collaboration tools and feedback systems.
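The two scaling claims can be made concrete with a minimal Python sketch. It rests on two simplifying assumptions: each step succeeds independently with the same probability, and each step re-reads the entire context accumulated so far. The function names and the 95% success rate and 1,000-tokens-per-step figures are hypothetical, chosen only for illustration.

```python
"""Toy model of compounding errors and growing context cost.

All names and parameter values are hypothetical illustrations.
"""


def workflow_success_rate(per_step_success: float, steps: int) -> float:
    """Probability that every step succeeds.

    Assumes steps are independent and share one per-step success
    probability, so the overall rate is p ** n (exponential decay in n).
    """
    return per_step_success ** steps


def cumulative_context_tokens(tokens_per_step: int, steps: int) -> int:
    """Total tokens processed across a run.

    Assumes step k re-reads all k * tokens_per_step tokens accumulated
    so far, so the total is t * n * (n + 1) / 2 (quadratic in n).
    """
    return sum(k * tokens_per_step for k in range(1, steps + 1))


if __name__ == "__main__":
    # Compare short and long runs under the same hypothetical parameters.
    for n in (5, 20, 50):
        rate = workflow_success_rate(0.95, n)
        tokens = cumulative_context_tokens(1_000, n)
        print(f"{n:>3} steps: success {rate:.1%}, tokens {tokens:,}")
```

Under these assumptions, a 95% per-step success rate falls to roughly 36% over 20 steps and below 8% over 50, while total tokens processed grow from 15,000 at 5 steps to 1,275,000 at 50: an 85x cost increase for a 10x longer run. Independence and full-context replay are the simplest models that produce the exponential and quadratic shapes; retries or context summarization would alter these curves.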