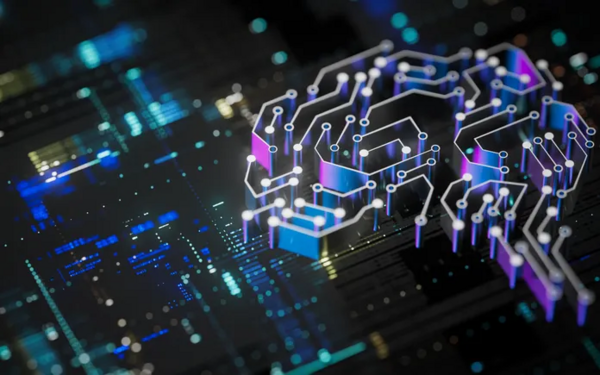

New Transformer Models Tackle Quadratic Complexity, Enabling Longer Sequences

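Standard self-attention compares every token with every other token, so its time and memory costs grow quadratically with sequence length. New Transformer models reduce this cost with techniques such as sparse attention, low-rank factorization, and kernel approximations. These techniques let the models process longer sequences efficiently while preserving their ability to model long-range dependencies.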
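To make the three families concrete, here is a minimal NumPy sketch under stated assumptions. It is not taken from any specific model. Sliding-window attention stands in for sparse attention, a Linformer-style projection of keys and values along the sequence axis stands in for low-rank factorization, and the linear-transformer feature map elu(x) + 1 stands in for kernel methods. All function names are hypothetical. The projection matrices `E` and `F` are random here, whereas a real model would learn them.

```python
import numpy as np

def softmax_attention(Q, K, V):
    """Standard scaled dot-product attention. It builds an (n, m) score
    matrix, which is (n, n) for self-attention, so cost is quadratic in n."""
    d = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V

def sliding_window_attention(Q, K, V, window):
    """Sparse attention: query i attends only to keys within `window`
    positions of i, so cost is O(n * window) instead of O(n^2)."""
    n, d = Q.shape
    out = np.empty((n, V.shape[-1]))
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        s = Q[i] @ K[lo:hi].T / np.sqrt(d)
        w = np.exp(s - s.max())
        out[i] = (w / w.sum()) @ V[lo:hi]
    return out

def low_rank_attention(Q, K, V, E, F):
    """Low-rank (Linformer-style) attention: (k, n) matrices E and F compress
    keys and values from n rows to k rows, so scores are (n, k), not (n, n)."""
    return softmax_attention(Q, E @ K, F @ V)

def elu_feature_map(x):
    # phi(x) = elu(x) + 1: equals x + 1 for x > 0 and exp(x) otherwise (always positive).
    return np.where(x > 0, x + 1.0, np.exp(x))

def linear_attention(Q, K, V):
    """Kernel-based attention: replace exp(q . k) with phi(q) . phi(k), then
    compute phi(Q) @ (phi(K).T @ V) so no (n, n) matrix is ever formed."""
    Qf, Kf = elu_feature_map(Q), elu_feature_map(K)
    kv = Kf.T @ V              # (d, d_v) summary of all keys and values
    z = Qf @ Kf.sum(axis=0)    # (n,) per-query normaliser
    return (Qf @ kv) / z[:, None]

rng = np.random.default_rng(0)
n, d, k = 512, 64, 32
Q, K, V = (rng.standard_normal((n, d)) for _ in range(3))
# Hypothetical random projections; learned parameters in practice.
E, F = (rng.standard_normal((k, n)) / np.sqrt(n) for _ in range(2))

outputs = {
    "softmax": softmax_attention(Q, K, V),
    "sliding_window": sliding_window_attention(Q, K, V, window=16),
    "low_rank": low_rank_attention(Q, K, V, E, F),
    "linear": linear_attention(Q, K, V),
}
for name, out in outputs.items():
    print(name, out.shape)  # each prints (512, 64)
```

Only `softmax_attention` materializes a full n-by-n matrix. The other three avoid it by different trade-offs. The window restricts which tokens interact. The low-rank projection compresses the sequence. The feature map swaps the exponential kernel for one that can be reassociated. Random-feature methods such as Performer aim to approximate the softmax kernel itself more closely.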