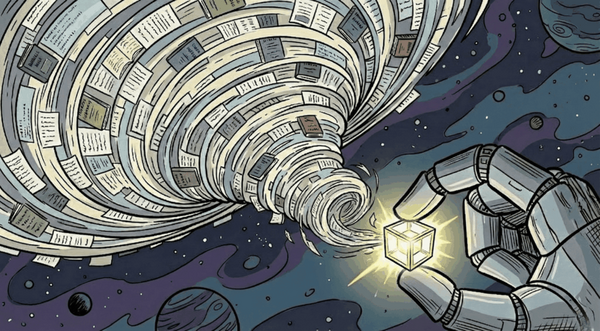

Google Develops Infini-Attention AI That Processes Infinite Context Using 114× Less Memory

Google researchers have unveiled Infini-attention, an AI architecture that processes unlimited context while using 114 times less memory. It does so by compressing conversation histories into fixed-size matrices instead of storing them in full. The approach successfully handled up to 1 million tokens and achieved breakthrough performance on book summarization and information retrieval tasks.
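
To make the idea of "compressing history into fixed-size matrices" concrete, here is a minimal sketch of an associative memory whose footprint does not grow with the number of tokens it has absorbed. The article does not spell out the update rule, so this sketch assumes a linear-attention-style scheme: each key/value pair is folded into a single `d_key × d_value` matrix plus a normalization vector. The names `CompressiveMemory` and `elu_plus_one` are hypothetical and chosen only for illustration; this is not Google's implementation.

```python
import math


def elu_plus_one(x):
    # ELU(x) + 1: a strictly positive feature map (assumed here, not stated in the article).
    return x + 1.0 if x > 0 else math.exp(x)


class CompressiveMemory:
    """Hypothetical fixed-size memory: one d_key x d_value matrix plus a d_key vector."""

    def __init__(self, d_key, d_value):
        self.d_key = d_key
        self.d_value = d_value
        self.M = [[0.0] * d_value for _ in range(d_key)]  # associative matrix
        self.z = [0.0] * d_key                            # normalization terms

    def update(self, keys, values):
        # Fold a segment of (key, value) pairs into the memory.
        # The memory's shape is unchanged no matter how long the segment is.
        for k, v in zip(keys, values):
            phi = [elu_plus_one(x) for x in k]
            for i in range(self.d_key):
                self.z[i] += phi[i]
                for j in range(self.d_value):
                    self.M[i][j] += phi[i] * v[j]

    def retrieve(self, query):
        # Read a value estimate for one query: phi(q) @ M / (phi(q) . z).
        phi = [elu_plus_one(x) for x in query]
        denom = sum(p * z for p, z in zip(phi, self.z))
        if denom == 0.0:
            return [0.0] * self.d_value  # nothing stored yet
        return [
            sum(phi[i] * self.M[i][j] for i in range(self.d_key)) / denom
            for j in range(self.d_value)
        ]

    def num_floats(self):
        # Storage cost in floats; independent of how many tokens were absorbed.
        return self.d_key * self.d_value + self.d_key


if __name__ == "__main__":
    mem = CompressiveMemory(d_key=2, d_value=3)
    for _ in range(1000):  # absorb 1000 two-token segments
        mem.update([[0.5, -1.0], [1.5, 0.2]], [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]])
    print(mem.num_floats(), mem.retrieve([0.5, -1.0]))
```

The trade-off in this sketch is that retrieval returns a weighted blend of everything stored rather than an exact lookup, which is the price of keeping storage constant. A standard attention cache, by contrast, keeps every key and value and grows linearly with context length.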