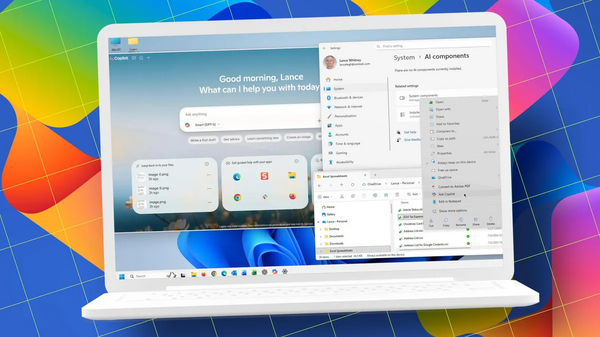

AI Systems Transform Data Analysis Into Star Trek-Style Conversations, Enabling Instant Visual Reports From Simple Questions

AI systems now make Star Trek-style conversations with data possible. Instead of commissioning an analyst and waiting for a lengthy report, users can ask a simple question such as 'show me last quarter's sales trend' and get a visual report back within moments. Large Language Models (LLMs) power this shift, turning traditional data analysis into an immediate, conversational interaction.
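The flow described above can be sketched in a few lines of Python: a question goes in, a structured query spec comes out, the data is aggregated, and the result is rendered as a chart. This is a minimal sketch under loud assumptions. `translate_question` is a hypothetical rule-based stand-in for the LLM step (a real system would prompt a model to produce the structured spec). `QuerySpec`, `SAMPLE_ROWS`, and the text bar chart are illustrative names with made-up numbers, not any product's API or real data.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class QuerySpec:
    """Hypothetical structured query an LLM might emit for a question."""
    metric: str        # column to sum, e.g. "amount"
    months: list[str]  # "YYYY-MM" buckets to report, in order


def previous_quarter_months(today: date) -> list[str]:
    """Return the three "YYYY-MM" months of the quarter before `today`."""
    prev_q = (today.month - 1) // 3 - 1  # 0-based index of previous quarter
    year = today.year
    if prev_q < 0:                       # January-March: wrap to Q4 of last year
        prev_q, year = 3, year - 1
    first = prev_q * 3 + 1
    return [f"{year}-{m:02d}" for m in range(first, first + 3)]


def translate_question(question: str, today: date) -> QuerySpec:
    """Hypothetical stand-in for the LLM: maps one known phrasing to a spec."""
    q = question.lower()
    if "sales" in q and "last quarter" in q:
        return QuerySpec(metric="amount", months=previous_quarter_months(today))
    raise ValueError(f"This sketch cannot interpret: {question!r}")


def run_query(spec: QuerySpec, rows: list[dict]) -> dict[str, float]:
    """Sum the requested metric per month, keeping only the requested months."""
    totals = {m: 0.0 for m in spec.months}
    for row in rows:
        if row["month"] in totals:
            totals[row["month"]] += row[spec.metric]
    return totals


def render_bar_chart(totals: dict[str, float], width: int = 20) -> str:
    """Render month totals as a plain-text bar chart scaled to `width`."""
    max_val = max(totals.values(), default=0.0)
    lines = []
    for month, value in totals.items():
        bar_len = round(value / max_val * width) if max_val > 0 else 0
        lines.append(f"{month} | {'#' * bar_len} {value:,.0f}")
    return "\n".join(lines)


# Made-up illustrative rows; the 2024-06 row falls outside the requested quarter.
SAMPLE_ROWS = [
    {"month": "2024-06", "amount": 999.0},
    {"month": "2024-07", "amount": 120.0},
    {"month": "2024-07", "amount": 30.0},
    {"month": "2024-08", "amount": 180.0},
    {"month": "2024-09", "amount": 200.0},
]

if __name__ == "__main__":
    spec = translate_question("Show me last quarter's sales trend", date(2024, 10, 15))
    print(render_bar_chart(run_query(spec, SAMPLE_ROWS)))
```

Keeping the "LLM" output as a small structured spec, rather than letting it produce the chart directly, is one common design choice: the spec can be validated before any query runs, and the aggregation and rendering steps stay ordinary, testable code.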